Our Commitment to Building Trustworthy and Responsible AI

We’re committed to building AI that supports students responsibly. From clear labelling and human oversight to fairness, safety, privacy, and accountability, our principles ensure AI remains a trustworthy study partner, not a replacement for you.

This statement applies to the website StudyStash.com and all AI-powered features within our platform.

Purpose and Scope

StudyStash helps students get answers from their course materials and creates study aids. This document explains how we use AI safely and what you need to know when using our tools. AI can make mistakes, so always check important information before using it in your studies.

Core Principles

We follow these key principles to keep our AI safe and useful:

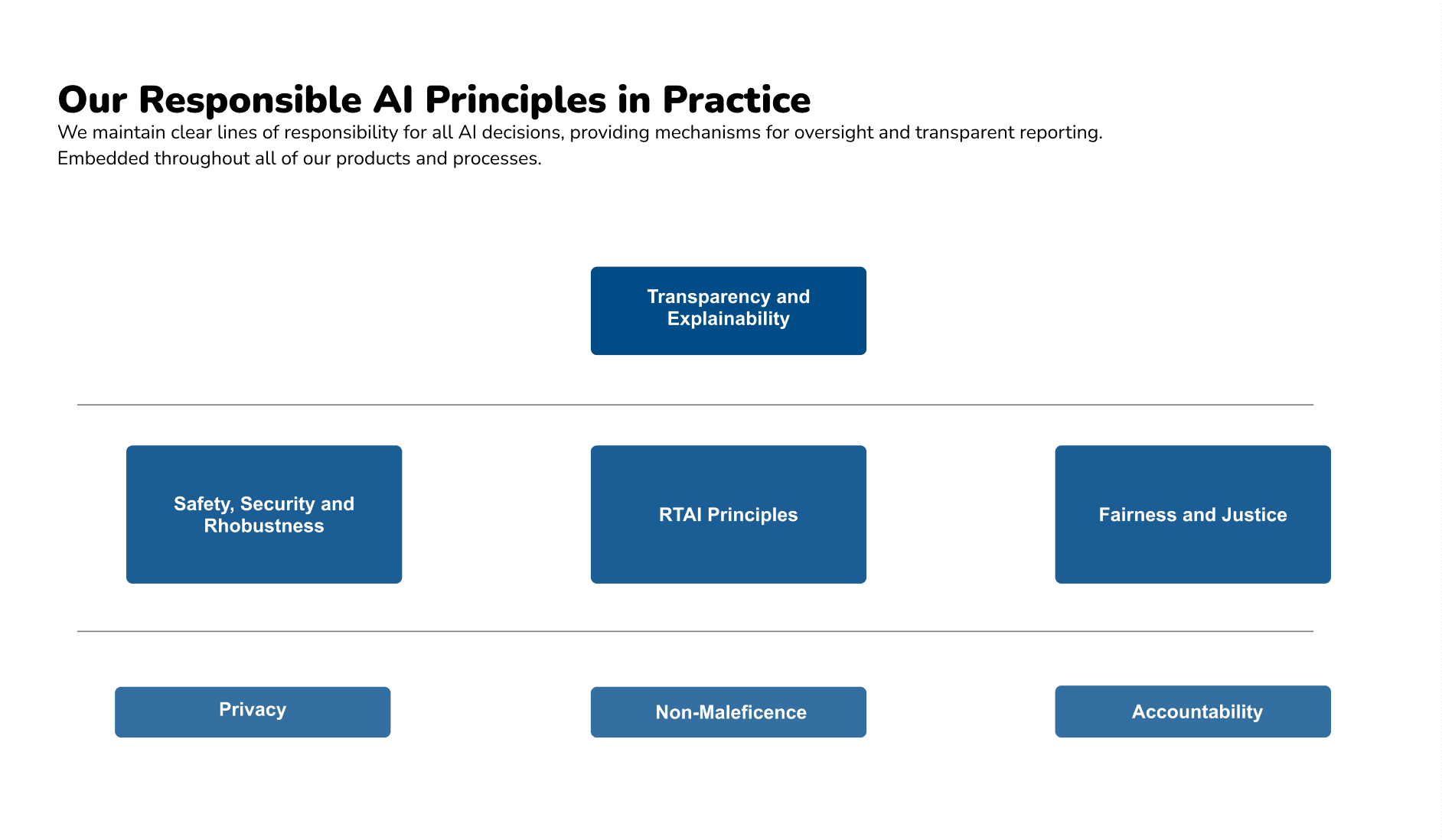

Trustworthy AI principles in practice

Here's how we put these principles into practice:

Transparency and Explainability

- AI features are clearly marked with sparkle (✨) icons

- AI-generated content is labelled so you know it came from AI

- 'Why did this appear?' buttons explain how AI found answers in your course materials

- Always check AI answers against your course materials

Reliability and Accuracy

We prioritise reliability and accuracy by making it clear that AI can produce incorrect or misleading content, and that all outputs should be carefully reviewed.

- AI-generated content may occasionally be inaccurate or incomplete. Users are expected to verify outputs against trusted course materials.

- We make it clear that AI should only be used to support study and revision based on course content, not for tasks beyond its intended use.

- All AI-generated content is editable, giving users full control to adjust, revise or remove outputs before using them.

- We recognise that AI can reflect bias, so we focus on use cases that reduce this risk.

- We intentionally limit AI use to educational content, avoiding areas where bias is more likely or more harmful.

- We have a separate document covering these two points on bias, which you can request at [email protected]

Fairness

We acknowledge that AI systems can reflect or amplify societal biases. To reduce this risk, StudyStash intentionally limits AI use to educational content and study support while avoiding high-risk or sensitive use cases. While we design features to minimise bias, we encourage users to review outputs carefully and use their judgment when incorporating AI-generated content into their learning.

Privacy and Security

- We protect your privacy and keep your data secure

- Don't include personal information when asking AI questions

- Your course data is never used to improve AI models without permission

- We follow GDPR and other privacy laws

- Find more details in our Trust Centre

Safety

We acknowledge that AI systems may occasionally produce inappropriate or unsafe content. We clearly flag within the platform that AI-generated output should be reviewed and verified before use. Students are advised to check outputs for accuracy and appropriateness and to consult their lecturers or educators if they are unsure.

Humans in Control

AI features are designed to support learning without removing human oversight. Users remain in control of when and how AI is used, and all outputs can be reviewed and edited before use. StudyStash's AI systems do not make automated decisions that have legal, academic or other significant effects on individuals.

- AI features are optional and can be turned on or off as needed, for example when creating flashcards or summaries.

- AI-generated content is always editable and can be shared for peer feedback and review.

Accessibility

We are WCAG 2.2 AA compliant. View our accessibility statement for more information.

Accountability

- We work with certified AI providers to keep your data safe

- We follow GDPR and other privacy laws

- We regularly test our security to protect your information

- We monitor AI accuracy through user feedback and regular testing

- You can give us feedback on AI answers using thumbs-up/down ratings

Performance Monitoring

We monitor AI accuracy through user feedback and regular testing to assess:

- Factuality: whether outputs are factually consistent with trusted references

- Relevance: whether responses align with learning management system (LMS) content and address user queries effectively

- Faithfulness: whether outputs are directly supported by the input context

- Quality: measured through rubric-based grading and semantic similarity assessments

- Operational Efficiency: API latency and cost tracking

Compliance & Governance

We follow GDPR and work with certified AI providers to keep your data safe and secure.

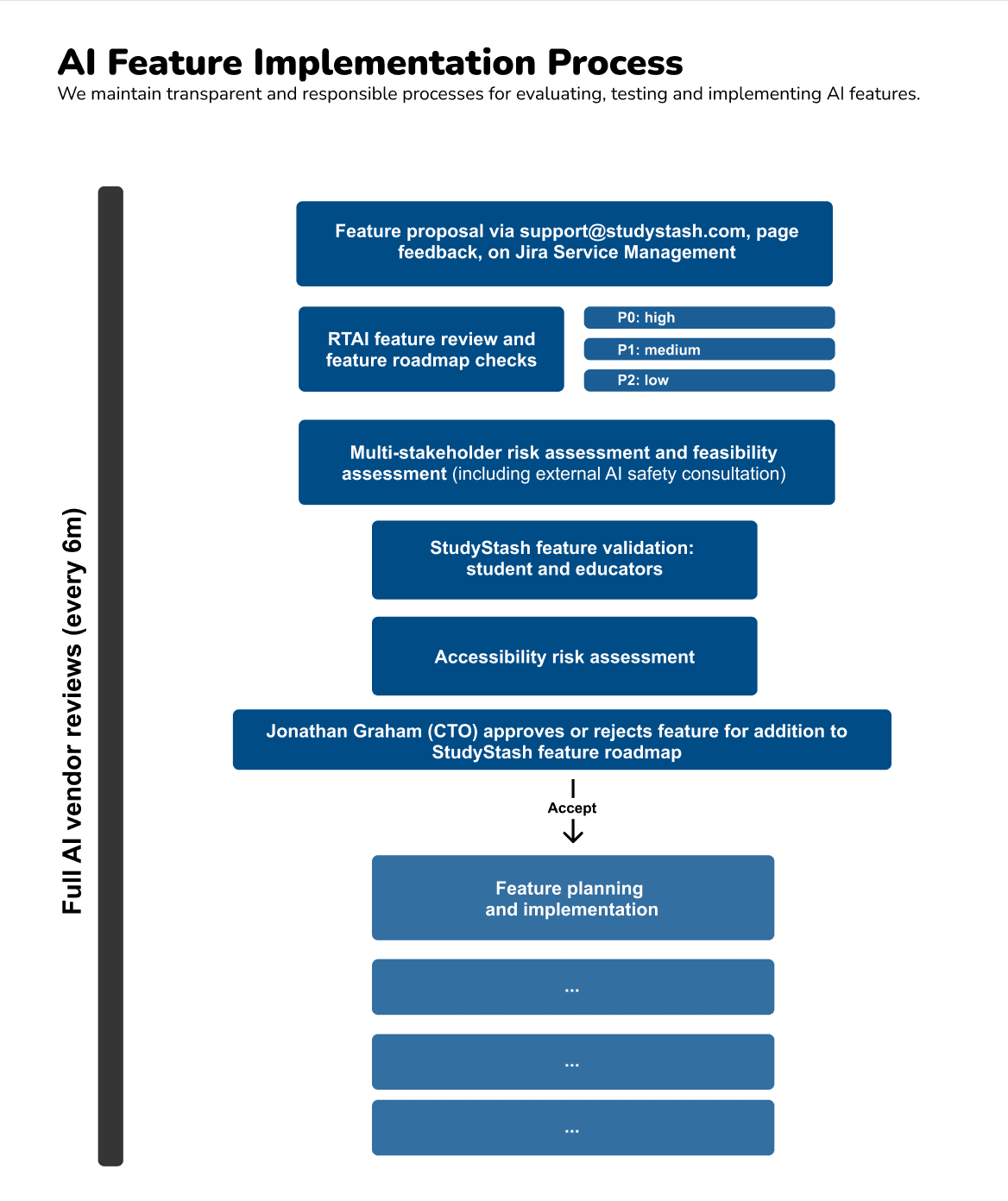

Internal Responsibilities

Our CTO, Jonathan Graham, oversees AI safety and ensures all AI systems align with our principles and commitments.

Review and Continuous Improvement

Because AI technology changes rapidly, StudyStash reviews its use of AI regularly.

- Quarterly Reviews: AI systems and practices are assessed against our principles and commitments

- Multi-stakeholder Input: Reviews draw on our AI safety team, external advisors and user feedback

- Proactive Updates: Practices are updated in response to new risks, findings or standards

- Industry Alignment: We stay current with AI safety research, regulatory developments and best practices

AI-powered study features

StudyStash does not currently use any in-house AI models. Instead, we use large language model (LLM) capabilities from certified vendors who hold ISO 27001 or SOC 2 Type II certification to power these features:

- StudyChat: AI-powered document querying for instant answers from uploaded study materials

- Practice Tests: Automated creation and marking of practice questions and assessments

- Supplementary Notes: AI-generated additional study materials and explanations

- Flashcards from Notes: Intelligent creation of flashcards from uploaded study materials

- Insights for Lecturer Reports: AI-generated insights and analytics for educators

- Document OCR & Processing: Intelligent text extraction and processing from uploaded documents

All features follow our Trustworthy AI principles, including transparency and user control. AI features are identified with sparkle (✨) icons and warning flags. Institutional data is never used to train third-party or external models without explicit signed consent from the institution.

Contact and Feedback

If you have concerns about our AI systems, need information about our AI practices, want to report potential issues, or need access to our fair use policy:

- Email [email protected]

- Email [email protected]

- Visit our Contact Us page

- Our fair use policy ensures responsible usage without placing restrictive limits on access to AI features. You can request the policy through our Trust Centre

Further information

For additional information about our AI practices and commitments, please refer to:

- StudyStash's Trustworthy and Responsible AI Framework

- StudyStash's Privacy Policy

- StudyStash's Terms of Use

- StudyStash's Accessibility Statement

Abbreviations

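| Abbreviation | Full Term |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| GDPR | General Data Protection Regulation |
| ISO 27001 | International Organisation for Standardisation 27001 (Information Security Management) |
| LLM | Large Language Model |
| LMS | Learning Management System |
| OCR | Optical Character Recognition |
| RTAI | Responsible and Trustworthy Artificial Intelligence |
| SOC 2 | System and Organization Controls 2 |
| WCAG | Web Content Accessibility Guidelines |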